This is the sixth, and final, Informatics Column describing our findings related to nurses’ perception of the American Nurses Association (ANA)-recognized, standardized, nursing terminologies. In this column, we will present the evaluation of each terminology given by nurses who have indicated that they have used the specific terminology. The terminologies considered are listed in the Box below.

Box. ANA-Recognized Standardized Nursing Specific Terminologies

| Abbreviation | Terminology |
| --- | --- |
| NANDA | North American Nursing Diagnosis Association |
| NIC | Nursing Intervention Classification |
| NOC | Nursing Outcomes Classification |
| Omaha | Omaha System |
| CCC | Clinical Care Classifications |
| PNDS | Perioperative Nursing Data Set |
| ICNP | International Classification of Nursing Practice |
| SNOMED | Systematized Nomenclature of Medicine |
| LOINC | Logical Observation Identifiers Names and Codes |
| ABC | Alternative Billing Codes |

In the first column, we reported the demographics of our respondents and their familiarity with the ANA-standardized nursing terminologies (Schwirian & Thede, 2012). Next, we looked at the educational preparation nurses received for using the terminologies (Thede & Schwirian, 2013). The third column reported users’ perceptions of their confidence in using the terminologies (Thede & Schwirian, 2013), while the fourth column assessed the effects of documenting with standardized nursing terminologies (Thede & Schwirian, 2013). In the fifth column, we examined users’ perceptions of the helpfulness of the standardized nursing terminologies in clinical practice (Thede & Schwirian, 2014). In this last column, we will summarize these survey findings and report users’ overall evaluation of the ANA-recognized nursing terminologies. Only evaluation responses from those who answered yes to the question about whether they used a given terminology in some way, such as in school or in the clinical area, are reported here. All these users were asked, “On a 5-point scale with 1 being FRUSTRATING and 5 EXCELLENT, how would you rate your experiences with X?”

The largest group of nurse respondents who said that they had used a terminology were NANDA users (368); however, this group had the lowest percentage of users who evaluated their experience (92.39%), as seen in Table 1. Although the CCC terminology had one of the smallest groups of users (40), this group was near the top in the percentage of users (97.5%) who evaluated their experience with the terminology.

| Terminology | Number of Users | Number of Users Who Evaluated the Terminology | % of Users Who Evaluated the Terminology |
| --- | --- | --- | --- |
| NANDA | 368 | 340 | 92.39% |
| NIC | 154 | 147 | 95.45% |
| NOC | 126 | 122 | 96.83% |
| Omaha System | 69 | 68 | 98.55% |
| CCC | 40 | 39 | 97.50% |
| PNDS | 69 | 65 | 94.20% |
| ICNP | 27 | 25 | 92.59% |
| SNOMED | 91 | 87 | 95.60% |
| LOINC | 47 | 45 | 95.74% |
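The percentage column in Table 1 is simply the number of users who evaluated a terminology divided by the number of users. As a minimal sketch (the counts are copied from Table 1; the variable and function names are our own illustration, not part of the survey instrument), the column can be reproduced as follows:

```python
# Counts copied from Table 1: (number of users, number who evaluated).
table1 = {
    "NANDA": (368, 340),
    "NIC": (154, 147),
    "NOC": (126, 122),
    "Omaha System": (69, 68),
    "CCC": (40, 39),
    "PNDS": (69, 65),
    "ICNP": (27, 25),
    "SNOMED": (91, 87),
    "LOINC": (47, 45),
}


def percent_evaluated(users: int, evaluated: int) -> float:
    """Share of users who rated the terminology, as a percentage (2 decimals)."""
    return round(100 * evaluated / users, 2)


rates = {name: percent_evaluated(u, e) for name, (u, e) in table1.items()}

# List the terminologies from the highest to the lowest evaluation rate.
for name, rate in sorted(rates.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<13}{rate:6.2f}%")
```

Sorting by rate makes it easy to see which groups were most and least likely to rate their experience.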

Evaluation Ratings

The evaluation ratings are presented in Table 2. The highest and lowest average evaluations differed by only about two-thirds of a point on the five-point scale (3.41 versus 2.76), and the standard deviation was about one for all the terminologies. Omaha System users gave the highest rating, while NOC users gave the lowest. The standard deviation was highest for the ICNP (1.15) and lowest for SNOMED (0.93).

| Terminology | Average Rating | Std. Dev. |
| --- | --- | --- |
| Omaha System | 3.41 | 1.05 |
| CCC | 3.33 | 0.96 |
| PNDS | 3.2 | 1.02 |
| LOINC | 3.31 | 1.14 |
| SNOMED | 3.14 | 0.93 |
| ICNP | 3.04 | 1.15 |
| NANDA | 2.91 | 0.96 |
| NIC | 2.86 | 1.02 |
| NOC | 2.76 | 0.96 |
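The summary statements about Table 2 (the spread between the highest and lowest averages, and the terminologies with the largest and smallest standard deviations) can be checked directly from the published figures. The following is an illustrative sketch only; the values are copied from Table 2 and the names are our own:

```python
# Values copied from Table 2: (average rating, standard deviation).
table2 = {
    "Omaha System": (3.41, 1.05),
    "CCC": (3.33, 0.96),
    "PNDS": (3.2, 1.02),
    "LOINC": (3.31, 1.14),
    "SNOMED": (3.14, 0.93),
    "ICNP": (3.04, 1.15),
    "NANDA": (2.91, 0.96),
    "NIC": (2.86, 1.02),
    "NOC": (2.76, 0.96),
}

# Terminologies with the highest and lowest average rating.
highest = max(table2, key=lambda name: table2[name][0])
lowest = min(table2, key=lambda name: table2[name][0])

# Gap between the highest and lowest average on the five-point scale.
spread = round(table2[highest][0] - table2[lowest][0], 2)

# Terminologies with the largest and smallest standard deviation.
widest_sd = max(table2, key=lambda name: table2[name][1])
narrowest_sd = min(table2, key=lambda name: table2[name][1])

print(highest, lowest, spread, widest_sd, narrowest_sd)
```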

Evaluations by Groups of Users

To determine whether where and how a terminology was used affected the evaluation rating, the results were broken down by a) school and clinical use, b) whether users perceived their education for use as adequate or inadequate, and c) whether users did or did not have follow-up education for using a terminology.

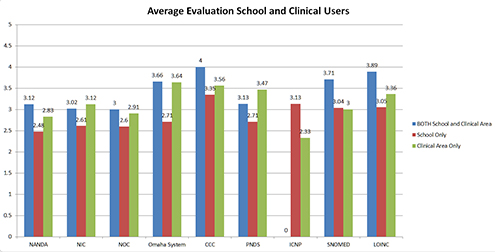

School and Clinical Use

Generally, those who used a terminology in both school and the clinical area evaluated their experience with the terminology more positively than those who used it in only one area (Figure 1), possibly because they were more comfortable using the terminology. Among those who used a terminology in only one setting, clinical-area-only users rated their experience more positively than school-only users. CCC users who used the terminology in school only (3.35) or in both school and the clinical area (4.00) rated their experience more positively than users of the other terminologies. Among clinical-area-only users, Omaha System users gave the highest average evaluation (3.64), narrowly ahead of CCC users (3.56). The lowest average rating came from the ICNP’s clinical-area-only users (2.33), but this terminology had the fewest users (27).

Figure 1. Average Evaluation for School and Clinical Users

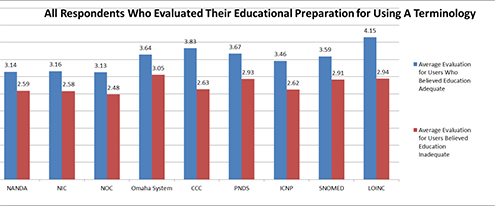

Educational Adequacy

It is not surprising that the way terminology users rated their education for using a terminology correlated positively with their subsequent evaluation of that terminology. Those who believed that their education to use a terminology was adequate rated their experience much higher than those who felt that their educational preparation was inadequate (Figure 2). The biggest differences were for users of the CCC (1.2 points) and LOINC (1.21 points), while the smallest differences were for NANDA (0.55 points), NIC (0.58 points), and Omaha System (0.59 points) users.

Figure 2. Adequacy of Education

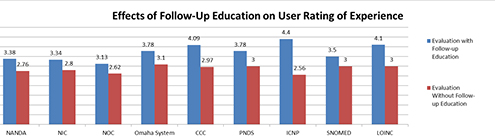

Follow-Up Education

When follow-up education was provided, users of every terminology gave a higher evaluation rating of their experience with the terminology (Figure 3). On the five-point scale, users of the CCC who had follow-up education gave an evaluation rating 27% higher than those who had no follow-up. SNOMED users had the smallest percentage difference (14.29%). The increase in evaluation when there is follow-up education is similar to the increase in users’ perception of comfort levels in using the terminologies and labels, as well as users’ confidence in their colleagues’ use of the terminology (Thede & Schwirian, 2013).

Figure 3. Effects of Follow-Up Education

Advantages and Limitations of Online Surveys

Online surveys have both advantages and disadvantages. They are relatively inexpensive to administer, and the data are immediately available for electronic analysis. Additionally, they are convenient for respondents to answer. In many cases, one can use the flexibility offered online in designing the survey (Gingery, 2011; Kalb, Cohen, Lehmann, & Law, 2012; Sincero, 2012). For example, in a technique known as branching, one can direct a respondent to a given set of questions based on their response to a prior question. We made extensive use of this feature to try to limit the analyzed responses to those from respondents who had indicated that they had a genuine knowledge of a terminology. A positive answer to a ‘lead question’ (e.g., Have you used this terminology in a patient care area?) provided the respondent with questions that should only be answered by those who had used the terminology in the clinical area. Those who answered in the negative never saw those questions.
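To make the branching idea concrete, here is a minimal sketch of how such logic might look. The question identifiers and the function name are hypothetical; they are not the survey's actual items or the survey software's interface. The sketch assumes the behavior described in this column: a negative answer hides the dependent questions, while any other outcome leaves them visible.

```python
from typing import List, Optional

# Hypothetical identifiers for questions that depend on the lead question.
CLINICAL_FOLLOW_UPS = ["documentation_ease", "helpfulness", "evaluation_rating"]


def next_questions(lead_answer: Optional[str]) -> List[str]:
    """Return the dependent questions shown after a lead question.

    Assumption: only an explicit "no" hides the dependent questions.
    A "yes" or a skipped lead question (None) leaves them visible.
    """
    if lead_answer == "no":
        return []
    return list(CLINICAL_FOLLOW_UPS)


print(next_questions("yes"))
print(next_questions("no"))
print(next_questions(None))
```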

Unfortunately, the survey hid the dependent questions only when a respondent answered the lead question negatively; thus it was possible for a respondent to skip the lead question and still answer the dependent questions. We could have made the lead questions required, i.e., not let participants continue in the survey unless they provided an answer. However, we believed that an answer could be invalid if the participant was forced to give it, and that too many required questions would be off-putting for respondents. To compensate, we analyzed responses to dependent questions only for respondents who had answered the lead question affirmatively.
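The analysis rule described above, keeping a dependent answer only when the lead question was answered affirmatively, can be sketched as a simple filter. The records and field names below are invented for illustration and do not come from the survey data:

```python
# Hypothetical response records; field names and values are illustrative only.
responses = [
    {"id": 1, "used_in_clinical_area": "yes", "evaluation_rating": 4},
    {"id": 2, "used_in_clinical_area": None, "evaluation_rating": 5},   # skipped the lead question
    {"id": 3, "used_in_clinical_area": "no", "evaluation_rating": None},
    {"id": 4, "used_in_clinical_area": "yes", "evaluation_rating": None},  # gave no rating
]


def analyzable_answers(records, lead_field, dependent_field):
    """Keep dependent answers only from respondents who answered the lead question 'yes'."""
    return [
        r[dependent_field]
        for r in records
        if r.get(lead_field) == "yes" and r.get(dependent_field) is not None
    ]


kept = analyzable_answers(responses, "used_in_clinical_area", "evaluation_rating")
print(kept)
```

In this sketch, respondent 2's rating is excluded even though it was entered, because the lead question was skipped rather than answered affirmatively.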

Like all online surveys, our survey also had disadvantages. Respondents were self-selected, and the researchers had no way of knowing if their answers were valid. Additionally, one has to question whether the respondents differed from non-respondents (Im & Chee, 2011), something that could not be determined. One can never be sure that a respondent has not responded more than once to the questionnaire. To see if this was prevalent, we checked the IP addresses of respondents for duplicates and found only two. In each case, we deleted the second response, realizing that doing so was questionable in that two different participants could have used the same computer. However, with only two instances, any effect on results would be minimal. Additionally, it would have been possible for one respondent to use two different computers with different IP addresses, but this was a risk that we had to take. No survey method, unless personally administered by one who can identify all the participants, is 100% safe.
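The duplicate check described above amounts to keeping the first response from each IP address and dropping any later one. A minimal sketch follows; the records are invented, the addresses come from a range reserved for documentation, and the function name is our own:

```python
def drop_repeat_ips(submissions):
    """Keep the first submission from each IP address and drop later ones.

    Assumes the submissions are already in the order they were received.
    """
    seen = set()
    kept = []
    for submission in submissions:
        ip = submission["ip"]
        if ip in seen:
            continue  # a later response from an address already seen
        seen.add(ip)
        kept.append(submission)
    return kept


# Hypothetical submissions, listed in order of receipt.
submissions = [
    {"id": 1, "ip": "192.0.2.10"},
    {"id": 2, "ip": "192.0.2.11"},
    {"id": 3, "ip": "192.0.2.10"},  # same address as id 1
    {"id": 4, "ip": "192.0.2.12"},
]

unique = drop_repeat_ips(submissions)
print([s["id"] for s in unique])
```

As noted in the text, this rule cannot distinguish two people sharing one computer from one person answering twice, nor can it detect one person answering from two addresses.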

It is possible that some users of a terminology in an Electronic Health Record (EHR) are unaware of the fact that they are ‘using’ the terminology. This is because some EHRs, behind the scenes and without the knowledge of a user, code entered data into a terminology. This may be particularly applicable to the PNDS, CCC, SNOMED, or LOINC terminologies. Although this problem is not unique to online surveys, we sometimes wondered if some of our respondents were actually using a terminology but, because it was integrated into the EHR, were not aware of it and so did not answer questions about that terminology. Despite these possible limitations, we believe that our respondents’ perceptions represent a fairly accurate picture of how nurses feel about terminologies.

Summary

Given that those who used a terminology in the clinical area generally found the terminology helpful (Thede & Schwirian, 2014), it would seem prudent to facilitate the use of a standardized nursing terminology by providers. Looking at the evaluations of all the terminologies, it would appear that how a user feels about a terminology depends greatly on their perception of the adequacy of their preparation for using the terminology, both before and after it is used (Thede & Schwirian, 2013). It would also appear that using a terminology both in school and in the clinical area is reflected positively in a user’s perception of the terminology.

This is the last column in this series of six columns reporting the results of the Second Survey about experiences and perceptions of users of Standardized Nursing Languages (SNLs). Compared with the First Survey (Schwirian & Thede, 2011; Schwirian, 2013), our Second Survey had approximately half as many respondents. In both surveys, the largest percentage of respondents had either a BSN degree (55.7% in Survey One and 55.6% in Survey Two) or an MSN degree (31.6% in Survey One and 36.8% in Survey Two). In Survey Two, 87.1% of our respondents were familiar with NANDA, which was started in the United States (US), while the terminology that respondents were least familiar with was the ICNP (19%). This is not surprising given that most of our respondents were based in the US.

When one considers (a) the perception of clinical users who documented with a terminology and reported that using a terminology made the documentation easier and more understandable (Thede & Schwirian, 2013); and (b) that with the exception of the ICNP (50%), more than 60% of users found an SNL helpful in the clinical area (Thede & Schwirian, 2014), it would appear that the use of SNLs should be promoted. However, if SNLs are to be used accurately and comfortably by nurses, both comprehensive educational preparation and follow-up education in the use of SNLs need to be provided. It may also be helpful to provide support in the clinical area by ‘super users.’

The one disappointing finding from this survey is that very few nurses are able to use a terminology for their own personal research, in that they cannot pull up reports for many of their patients using specific terms. EHRs should be designed to provide practitioners with the ability to reflect accurately on their practice. Instead of relying on memory, which may often be selective, the ability to view aggregate data about the patients for whom they provided care would allow nurses to be truly reflective about their practice. This ability could also create more interest among nurses in participating in nursing research.

Linda Thede, PhD, RN-BC

Email: lqthede@roadrunner.com

Patricia M. Schwirian, PhD, RN

Email: schwirian.1@osu.edu

Thede, L. Q., & Schwirian, P. M. (2013, Dec 16). The standardized nursing terminologies: A national survey of nurses’ experience and attitudes—SURVEY II: Participants’ documentation use of standardized nursing terminologies. OJIN: The Online Journal of Issues in Nursing, 19(1). doi: 10.3912/OJIN.Vol19No